1) Why Web Caching?

The World Wide Web's rapid growth, coupled with the increased use of Web technology in corporate intranets, has ushered in a new era of network computing. While the pundits talk about e-commerce, virtual communities and the digital nervous system, the true hallmarks of this era are unprecedented levels of user frustration and ever-increasing WAN costs. Why? Because the Web's underlying infrastructure was not designed to support the demands now being placed on it.

While this situation is bad enough, the truth is that it's going to get worse before it gets better. As corporate users get faster LAN connections to their desktops and branch office and home users deploy faster access technologies such as 56K modems, cable modems, ISDN and xDSL, they will expect faster and faster responses from the Web and intranets. These expectations will be dashed if slow WANs and congested servers lie at the other end of users' high-speed access links. True, enterprises and service providers are deploying faster WANs and larger servers. However, this is expensive and is only a band-aid until users once again overrun the capacity of the infrastructure and the cycle repeats itself. At some point, in the face of seemingly limitless user demand, diminishing returns come into play and the network simply collapses under its own weight. Further, fixed capacity investments are of limited value in a world where users flock to a Web site hosting the latest honeymoon video from a rock star and his actress wife one day and to a different site hosting the latest Mars exploration pictures the next.

Clearly, a new architecture is needed, one that is flexible, doesn't require constant server and bandwidth upgrades, is inexpensive to deploy and maintain and easy to administer. No matter what its final form, a key element in this architecture will be implementing a distributed system that stores frequently accessed content close to users. Storing data close to users is what Web caching is all about.

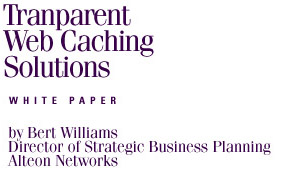

Web caching improves user response time in several ways. First, as shown in Figure 1, below, it allows users to access Web pages while minimizing the WAN traffic needed to access those pages. For corporate intranet users, this means caching content on their site's LAN so that pages don't have to be retrieved across a private WAN. For World Wide Web users, this means storing content at their ISP's POP so that pages don't have to be retrieved across the Internet backbone. In both cases, not only does the user get better service in the form of faster responses but also the enterprise or ISP benefits from lower WAN costs.

Second, Web caching, in effect, implements a distributed processing system, increasing the aggregate processing power available to popular Web pages by storing them on many servers. More aggregate processing power for a Web page translates into faster responses for users accessing the page. Third, Web caching effectively allocates processing power to the content that most needs it at that time. The more popular the content, the more likely it will be in cache. So if everyone wants to see videos of rock stars and actresses one day and pictures of Mars the next, caching systems, by their very nature, accommodate this usage pattern.

2) The Ideal Caching System

What characteristics would the ideal caching solution have? Among them would be:

1) 100% hit rate
2) Instant user responses for cached pages
3) No unnecessary WAN traffic
4) No unnecessary duplication of cached content
5) 100% uptime
6) No impact on non-Web traffic
7) No incremental hardware or software costs
8) Zero administration of clients, caches and intermediate systems

Clearly, this idealized system is unattainable. However, the closer a cache system gets to attaining these goals, the better it will serve both its users and its administrators.

Now, we'll examine each of these goals in more detail.

The first goal is 100% hit rate. A 100% hit rate means that every time a user requests cacheable (i.e., not truly dynamic) content from a Web page, it is in the cache and the information is current. Until caching systems can read users' minds, a 100% hit rate will be impossible to achieve. However, good caching system design has a big impact on increasing the hit rate. Alternatively, poor caching system design can result in a low hit rate. One important consideration in this respect is whether or not multiple user requests for the same content are directed to the same cache when multiple caches are deployed on a site. Directing a series of requests for the same content to the same server ensures that all requests except the first can be serviced by cached content. It also ensures that the content is not prematurely deleted from caches that employ a least recently used (LRU) algorithm when determining what content to flush when the cache fills.
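To make the LRU behavior concrete, the following is a minimal sketch in Python, not the implementation of any particular cache product. The class name `LRUCache`, the capacity counted in objects rather than bytes, and the method names are all assumptions made for illustration.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """Toy cache that flushes the least recently used object when full."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, url: str) -> Optional[bytes]:
        """Return cached content, or None on a miss."""
        if url not in self._items:
            return None
        # A hit makes this object the most recently used one.
        self._items.move_to_end(url)
        return self._items[url]

    def put(self, url: str, body: bytes) -> None:
        """Store content, evicting the least recently used object if needed."""
        self._items[url] = body
        self._items.move_to_end(url)
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # oldest entry sits at the front
```

If requests for one page are spread over several such caches, each cache sees the page less often, so the page is more likely to age out of every one of them.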

The second goal is to provide virtually instant responses to user requests. Part of this relates to hit rate: data in cache is returned more quickly than data that must be retrieved from the site hosting the content. However, part of it relates to other factors such as having sufficient processing power available in the cache to quickly service all requests. Cache processing power can be increased not only by deploying larger cache engines but also by offloading networking functions from the cache to the networking device the cache attaches to and by clustering caches into a cache farm.

The third goal is for the caching system to generate no unnecessary WAN traffic. That is, the caching system would not go to the WAN for content unless the requested content was not available in any local cache. Directing multiple requests for the same content to the same server helps meet this goal, as well as helping to meet the hit rate goal.

The fourth goal is that the cache does not unnecessarily duplicate content. The more unnecessary duplication of content in the system, the more disk space that is required to achieve a given hit rate, wasting money. Or, looking at things another way, for any given amount of disk space, the more unnecessarily duplicated content there is, the lower the hit rate will be, driving up WAN costs and lowering user satisfaction.

The fifth goal is for the caching system to be highly available, with 100% uptime. Depending on the type of cache system deployed, the consequence of cache system failure can be loss of Web connectivity or loss of all Internet connectivity. Cache system availability can be improved by deploying redundant caches and providing for redundancy in the network connections to the caches.

The sixth goal is for the caching system to have no impact on non-Web traffic. As will be discussed later, some caching systems have components that must be inserted into the data path of both Web-related and non-Web-related traffic. In this case, the system must minimize the impact of caching on non-Web traffic.

Seventh, the caching system should cost little. Since even freeware caching solutions like Squid must run on some server platform, no caching system is truly free. However, caching systems must be affordable compared to the benefits they offer.

Finally, the caching solution should require minimal administration. When considering how much administration is required, the administration of client browsers, the caches themselves and any required intermediate systems (e.g., switches or routers) must be considered. However, the most emphasis should be placed on client browsers because there are a lot more of them than of any other component in the system and they may be in locations that are inaccessible to administrators. For example, in a consumer-oriented ISP environment, the browsers are in the customers' homes.

3) Basic Cache System Operation

For caching to work, the cache, not the Web server, must receive users' requests for Web content. One way to make sure this happens is to configure the browsers to send requests for Web content directly to the cache. Another is for the browsers to address requests for Web content to the servers hosting the content as usual and to have some other device intercept and redirect the requests to the cache. The two cases are discussed in more detail in Section 4, Cache Deployment Options, below.

When a cache receives a request for Web content, it checks to see whether or not it has stored the requested content. If the cache has the content, it sends it directly back to the requestor. If the cache does not have the content, it requests it from the Web server that hosts the content. This server may lie somewhere in an intranet in a corporate environment or across the Internet. When the cache receives the content from the hosting server, it relays the content back to the requestor and caches the content so that future requests for the same content can be handled from the cache.

Some caches use mechanisms such as timeouts on content and checking for changes with the server hosting the content to ensure that cached content is current.
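The request flow described above, together with a simple content timeout, can be sketched as follows. This is an illustrative Python sketch only: the class `SimpleWebCache`, the `fetch_from_origin` callback, the `ttl_seconds` parameter and the hit and miss counters are hypothetical names, and checking with the hosting server for changes is not shown.

```python
import time
from typing import Callable, Dict, Tuple


class SimpleWebCache:
    """Toy cache: answer from the store on a hit, go to the origin on a miss."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin   # stands in for a request to the hosting server
        self._ttl = ttl_seconds           # assumed timeout after which content is stale
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1
            return entry[1]               # cached and still considered current
        # Not cached, or timed out: retrieve from the hosting server,
        # relay the content and keep a copy for future requests.
        self.misses += 1
        body = self._fetch(url)
        self._store[url] = (now, body)
        return body
```

In this sketch only the first request for a page, and the first request after the timeout expires, reach the hosting server.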

4) Cache Deployment Options

Caching systems generally use one of three deployment options to ensure that requests for Web pages go to the cache and not the server hosting the content.

The first option is called proxy caching. With proxy caching, browsers are configured to send requests for Web pages directly to the cache instead of to the Web server hosting the content.

The second option is called transparent proxy caching. With transparent proxy caching, browsers send requests for Web pages to the Web server hosting the content, as usual. The cache itself sits in the data path, examines all packets bound for the Internet, intercepting and servicing the Web traffic. In this case, the cache must be physically located somewhere in the data path, usually around the last router hop before the WAN. This requires that the cache be configured as a router hop or that the clients be configured to use the cache as their default router. If this approach is used in enterprise environments, it's best to put the cache as close to the WAN as possible so that intranet traffic can be served from the hosting servers and not the cache.

The third option is another form of transparent proxy caching that adds a component called a Web cache redirector. Transparent proxy caching with Web cache redirection removes the cache from the data path. Another device, usually a LAN switch or router running special software, sits in the data path, examines all packets bound for the Internet, intercepts the Web traffic and sends it to the cache for processing. As with plain transparent proxy caching, in enterprise environments it's best to put the Web cache redirector as close to the WAN as possible so that intranet traffic can be served from the hosting servers and not the cache.

In the following sections, we'll take a closer look at each of these options and their pros and cons vis-à-vis the ideal caching solution outlined earlier.

4.1 Proxy Caching

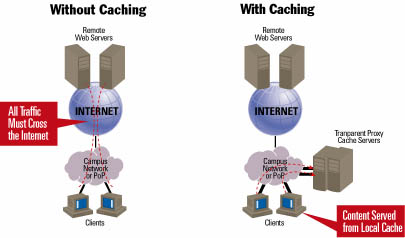

As shown in Figure 2, below, with proxy caching all requests for Web pages are sent to the IP address of the cache. For example, the client on the upper left has its browser configured to point all HTTP traffic to IP address X, belonging to the cache in the upper middle of the drawing. If the client on the upper left requests the solid or striped Web pages, the request can be serviced from the cache. If it requests the hatched Web page, the cache must send a request to host A to retrieve the information.
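The same explicit configuration applies to any HTTP client, not only to browsers. As a hedged illustration, the sketch below points Python's standard `urllib` client at a cache. The address and port in `CACHE_X` are hypothetical stand-ins for IP address X in the figure.

```python
import urllib.request

# Hypothetical address of cache X; 192.0.2.0/24 is reserved for documentation.
CACHE_X = "http://192.0.2.10:3128"

# Send HTTP requests to the cache instead of to the server hosting the content.
proxy_handler = urllib.request.ProxyHandler({"http": CACHE_X})
opener = urllib.request.build_opener(proxy_handler)

# opener.open("http://www.example.com/") would now be sent to CACHE_X, which
# answers from its store or retrieves the page from the hosting server.
```

Every client needs a setting of this kind, and the setting names exactly one cache.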

This approach has some advantages. First, because the clients point all Web requests (and only Web requests) directly to the cache, there is no impact on non-Web traffic. (Compare this to transparent proxy caching where the cache or a redirector must sit in the data path and look at all traffic to determine which is Web traffic.) Second, incremental hardware and software costs are limited to the server running the cache and the caching software. Depending on the specifics of the solution, these items may be purchased separately or as a bundle. Third, administration on the caches is limited to basic configuration and no administration is required on intermediate systems because none are involved.

However, client administration is a major disadvantage of proxy caching because every browser must be configured to point to the cache. As noted earlier, the amount of administration needed on the browsers has a huge impact on the overall administrative burden because there are many more browsers than there are caches or intermediate systems. Browser configuration is burdensome in a corporate environment but can be somewhat automated in some cases. It's nearly impossible in an ISP environment, where the ISP has no control over the desktop.

Another major disadvantage of proxy caching is that each client can hit only one cache server, even if multiple caches have been deployed for scalability. As shown in Figure 2, if multiple caches are deployed and proxy caching is used, the browsers must be configured such that some browsers point to one cache and other browsers to another cache.

The fact that each browser points to a specific cache leads to a number of undesirable consequences. First, the content that a user desires may be cached in the local network but not in the cache to which the user's browser points. For example, in Figure 2, the client on the lower left wants access to the solid Web page. It is not on cache Y but is on cache X. Unfortunately, the client's browser is pointed to cache Y and can't get the Web page from it, resulting in a cache miss. The result of the cache miss is a lower hit rate, incremental WAN traffic and increased user delay. Some of these disadvantages can be avoided if the caches use a cache-to-cache handoff protocol. However, this functionality is not available on all caching systems.

Second, proxy caching leads to unnecessary duplication of data. For example, Figure 2 illustrates the case where clients pointed to cache X and clients pointed at cache Y have requested the blue Web page. Since the proxy caches generally cannot redirect requests for content to other caches, the blue Web page is stored on both caches, leading to unnecessary duplication of data.

Third, additional user delay and data duplication occur because all Web traffic, including intranet traffic, must be serviced by the cache. While caching is good in cases where it prevents traffic from crossing the Internet, it can be a bottleneck in cases where the content is otherwise available in the local network.

Finally, proxy caching can cause unnecessary downtime. If a user's browser points to a cache that is down, then the user has no Web access until their browser is reconfigured or the cache returns to service.

4.2 Transparent Proxy Caching

Transparent proxy maintains several important benefits of proxy caching. Since no intermediate systems are required, incremental hardware and software costs are limited to the cost of the server running the cache and the caching software. This also means that no administration of intermediate systems is required.

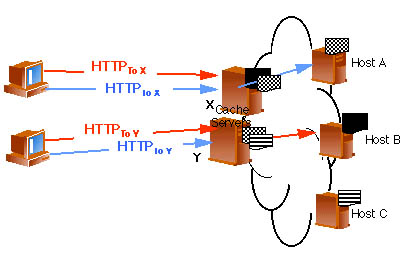

As shown in Figure 3, below, transparent proxy caching allows browsers to address requests for Web pages to the servers hosting the content. This eliminates one of the big disadvantages of proxy caching, the need to configure the browsers. With transparent proxy caching, no browser configuration is needed. (While it is true that one way to configure transparent proxy is for each client to use the cache as its default gateway, this is not incremental administration because all desktops must be configured with a default gateway under any circumstances. The only thing that changes is that the address of the cache is used instead of the address of a router.)

Unfortunately, while transparent proxy gets rid of one of the main drawbacks to proxy caching, it retains a couple and adds a couple of new drawbacks of its own. One it retains is that clients generally hit only one cache because there is generally only one active path between each client and the Internet. Therefore, each client will hit only the cache on that path. As a result, all of the comments in Section 4.1, Proxy Caching, about data being cached locally but unavailable to a user because they hit the wrong cache apply here as well.

Transparent proxy caching can worsen the problem with lowered availability. The implications for availability can be worse because the cache sits in the data path. As a result, if the cache a user hits goes down, then the user loses all Internet connectivity and possibly some intranet connectivity as well, not just Web connectivity. Connectivity is restored only when an alternative path becomes operational.

One drawback that transparent proxy adds is that it can have a negative impact on non-Web traffic. Remember that all Internet traffic must pass through the cache, not just Web traffic. The cache must filter out the Web-related traffic and pass the rest on. Unfortunately, the processing architecture that works best for caches and other types of servers is not well suited to examining the internals of high volumes of data packets. As a result, transparent proxy caches can slow down all kinds of communications to the Internet because they have to process every packet to see which ones are Web traffic.

Finally, transparent proxy caches cause extra administrative work because they must be configured as routers. As noted earlier, transparent proxy caches sit in the data path and are generally configured as next hop routers or as clients' default gateway. This implies that the cache must be configured with two network interfaces, one connected to the client side of the network and the other connected to a path to the Internet. The cache must also be configured to route between these interfaces so that non-Web traffic that needs to go to the Internet can get there.

The routing, packet examination and network address translation functions of a transparent proxy cache steal CPU cycles from the primary function of the cache - serving Web pages. This reduces the overall performance of the caching system.

4.3 Transparent Proxy Caching with Web Cache Redirection

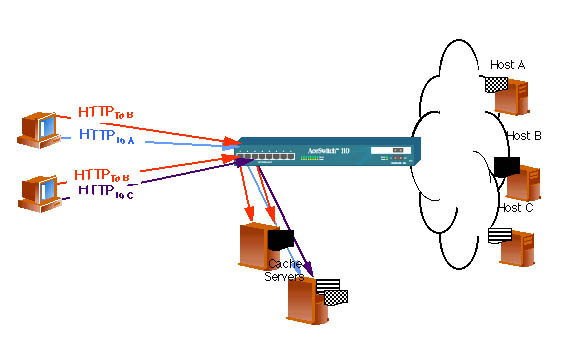

Web cache redirection is generally run on a networking device such as a LAN switch or router. As shown in Figure 4, below, the redirector sits in the data path, the cache is moved out of the data path and the network functions performed by the cache in the plain transparent proxy case are offloaded to the redirector or, in some cases, eliminated entirely. In particular, the redirector takes care of examining each packet to determine which are Web traffic, passing on the ones that aren't, performing network address translation on those that are and passing them on to the cache. If the cache redirection is performed on a router, it also handles all of the routing chores. If it is performed on a switch, the need to do routing at that point in the network is eliminated.
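The per-packet decision made by a redirector can be sketched in a few lines of Python. This is a simplified illustration, not a description of any product: the `Packet` type and the `redirect` function are hypothetical, Web traffic is assumed to be identified as TCP traffic to port 80, and the bookkeeping that real address translation needs for return traffic is omitted.

```python
from dataclasses import dataclass, replace

HTTP_PORT = 80  # assumption: Web traffic is recognized as TCP to port 80


@dataclass(frozen=True)
class Packet:
    protocol: str   # "tcp", "udp", ...
    dst_ip: str
    dst_port: int


def redirect(packet: Packet, cache_ip: str) -> Packet:
    """Return the packet to send on.

    Web packets have their destination address replaced with the cache's
    address; everything else is passed on unchanged.
    """
    is_web = packet.protocol == "tcp" and packet.dst_port == HTTP_PORT
    if is_web:
        return replace(packet, dst_ip=cache_ip)
    return packet
```

Because the test is a simple header comparison, it suits a device built for packet handling rather than the cache server itself.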

Adding Web cache redirection to a transparent proxy cache solution eliminates the drawbacks of transparent proxy without redirection while retaining most of the advantages. Because either a switch or a router, in either case a device optimized for examining packets, does the packet processing, it is done very quickly and there is minimal impact on non-Web traffic. Removing the packet examination function, as well as the network address translation and routing functions, from the cache frees up CPU cycles on the cache for serving Web pages - the job the cache is really supposed to do. Also, offloading these functions from the cache means that the cache doesn't have to be configured to do network address translation or routing. Since all transparent proxy systems eliminate browser configuration, adding the redirector brings caching as close to zero administration as possible.

Using a cache redirector that is separate from the caches themselves allows the client load to be dynamically spread over multiple caches, scaling processing power which, in turn, can reduce response time. Unlike the previously examined cases, a Web cache redirector can receive requests from one client and spread the load over multiple servers, directing requests for content to the cache storing that content. This increases hit rate, decreases WAN traffic and user response time and eliminates the need to unnecessarily store multiple copies of content.
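The effect on hit rate and duplication can be illustrated with a small simulation. The sketch below is a toy model under stated assumptions: two caches of unlimited size, four clients that each request the same three pages, and a simple checksum of the URL standing in for whatever method a real redirector uses to choose a cache. All names are hypothetical.

```python
import zlib

CACHES = ["cache-x", "cache-y"]


def cache_for_client(client_id: int) -> str:
    """Proxy-style pinning: each browser is configured for one cache."""
    return CACHES[client_id % len(CACHES)]


def cache_for_url(url: str) -> str:
    """Redirector-style: the cache is chosen from the requested content."""
    return CACHES[zlib.crc32(url.encode("utf-8")) % len(CACHES)]


def simulate(requests, choose):
    """Return (hits, stored_copies) for a list of (client_id, url) requests."""
    stored = {name: set() for name in CACHES}
    hits = 0
    for client_id, url in requests:
        cache = choose(client_id, url)
        if url in stored[cache]:
            hits += 1
        else:
            stored[cache].add(url)  # miss: fetch across the WAN, then store
    copies = sum(len(urls) for urls in stored.values())
    return hits, copies


requests = [(client, url)
            for url in ("/solid", "/striped", "/hatched")
            for client in range(4)]

pinned = simulate(requests, lambda client, url: cache_for_client(client))
by_content = simulate(requests, lambda client, url: cache_for_url(url))

print("pinned browsers:   hits=%d copies=%d" % pinned)       # hits=6 copies=6
print("chosen by content: hits=%d copies=%d" % by_content)   # hits=9 copies=3
```

With pinned browsers, each page is fetched across the WAN once per cache and stored twice. When the cache is chosen by content, each page is fetched and stored once.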

Web cache redirectors can also improve availability. The cache redirector is aware of the cache(s) connected to it and can perform health checks on the cache(s). If multiple caches have been deployed, the redirector can direct requests for content only to caches that have passed the health checks. Further, redundant redirectors can be deployed, eliminating any single point of failure in the system and yielding the ultimate in uptime.

The one negative to Web cache redirection is that an additional, intermediate system must be deployed. The redirector must be purchased and configured, increasing modestly the expense of the solution and incurring some minimal additional administrative burden.

5) Alteon's Approach to Web Cache Redirection

Alteon Networks pioneered the concept of server switching. The fundamental idea behind server switching is to optimize the interface between the network and the servers. With server switching, networking tasks are offloaded from server CPUs so the servers can more effectively do their intended jobs, running applications. Server switching also scales application performance by acting as an intelligent front-end processor for server farms. This enables applications to run across multiple servers in a manner that is transparent to clients. It also improves application availability by ensuring that client requests are directed only to healthy servers.

Web cache redirection is a perfect example of a server switching function. Server switches running Web cache redirection act as intelligent front-end processors to cache farms, providing network connectivity, intelligently directing Web traffic to caches in the cache farm and offloading network-related processing from cache CPUs.

Alteon's server switching product line contains both the ACEswitch family of Gigabit/Fast Ethernet server switches and the ACEdirector family of Fast Ethernet Internet traffic directors. For simplicity, this paper will refer to the ACEdirector. However, all of the described capabilities of the ACEdirector are also supported in the ACEswitch.

5.1 Web Cache Redirection on the ACEdirector

When configured to support Web cache redirection, the ACEdirector examines all packets going through it, redirecting Web packets to a cache. Non-Web traffic is forwarded toward its destination using Layer 2 switching.

When multiple caches are connected to the ACEdirector, directly or via hubs or switches, the ACEdirector uses its SUREFIRE advanced cache-hit algorithm to distribute packets across the caches. The SUREFIRE algorithm provides load sharing, transparently scaling cache performance for faster responses. SUREFIRE also ensures that multiple requests for the same content are directed to the same cache. This is important because it increases hit rate, decreases WAN traffic and user response time and eliminates unnecessary duplication of data in the cache farm. SUREFIRE also ensures that there is minimal perturbation when caches are added to or removed from the cache farm.
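The internals of SUREFIRE are not described in this paper. As a stand-in, the sketch below uses rendezvous (highest random weight) hashing, a well-known technique with the same two properties: requests for the same content go to the same cache, and removing a cache remaps only the content that cache was serving. The function name `pick_cache` and the use of the URL as the key are assumptions for illustration, and the technique should not be read as the SUREFIRE algorithm itself.

```python
import hashlib
from typing import Sequence


def pick_cache(url: str, caches: Sequence[str]) -> str:
    """Choose a cache for a URL using rendezvous hashing.

    Each (cache, url) pair gets a pseudo-random score and the cache with the
    highest score wins. Scores for the remaining caches do not change when a
    cache is added or removed.
    """
    if not caches:
        raise ValueError("no caches available")

    def score(cache: str) -> int:
        digest = hashlib.sha256(f"{cache}|{url}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    return max(caches, key=score)


farm = ["cache-1", "cache-2", "cache-3"]
urls = [f"/page/{i}" for i in range(200)]
before = {u: pick_cache(u, farm) for u in urls}
after = {u: pick_cache(u, farm[:-1]) for u in urls}  # cache-3 removed
moved = [u for u in urls if before[u] != after[u]]
```

In this example every URL in `moved` was previously assigned to `cache-3`. Content held on the other two caches stays where it is, so their stored pages remain useful.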

5.2 ACEdirector Architecture

Examining thousands or tens of thousands of packets per second, determining which must be sent on to the Internet and which should be redirected to a cache (and to which cache) requires vast amounts of processing capacity and memory. Every incoming packet must be examined to determine if it is a Web packet. Packets that are not Web-related must be sent on toward their destinations using Layer 2 switching. For each Web packet, the SUREFIRE algorithm must be executed to determine to which cache in the cache farm the packet should be sent. After this determination is made, the packet is manipulated so that the proper cache will receive it. Additional, background processing is also necessary to perform tasks such as checking the health of the caches, exchanging keep-alive messages when hot-standby switches are used and collecting and reporting statistics for network management.

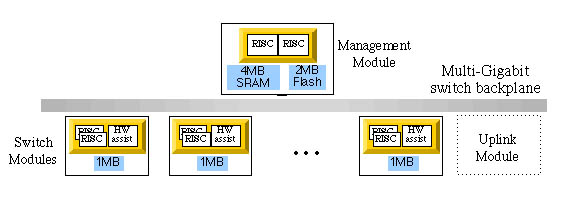

The ACEdirector's distributed processing architecture is ideally designed for processor-intensive packet examination and manipulation. Each port on the ACEdirector integrates a switching ASIC that comprises a hardware-assisted forwarding engine and dual, 90-MHz RISC processors. Two additional RISC processors provide support for switch-wide management functions. (See Figure 5.)

On each switch port, the processor in the switching ASIC handles packet examination and forwarding, execution of the SUREFIRE algorithm and address substitution. Background tasks such as cache health checking, keep-alive message exchange, and statistics gathering and reporting are handled by the central management processors. With this architecture, processing tasks for each session are distributed to different processors for parallel operations, increasing overall performance.

5.3 Cache Health Monitoring

As noted in Section 2, The Ideal Caching System, 100% uptime is one goal for caching systems. To facilitate this goal, the ACEdirector monitors the health of caches and directs packets only to healthy caches.

5.3.1 Connection Request-Based Health Monitoring

The ACEdirector monitors the health of caches by sending HTTP connection requests to each cache in the cache farm on a regular basis. These connection requests identify both failed caches and failed HTTP services on a healthy cache. When a connection request succeeds, the ACEdirector quickly closes the connection.

If connection request-based testing indicates a failure, the ACEdirector places the cache in the "Server Failed" state. At this time, the ACEdirector stops redirecting HTTP packets to the cache, distributing them across the remaining healthy caches in the cache farm. If all caches in the cache farm are unavailable, the ACEdirector sends the requests for Web content to the server hosting the content.

When a cache is in the "Server Failed" state, the ACEdirector initially performs health checks by sending Layer 3 pings to the cache at a user-configurable rate. After the first successful ping, the ACEdirector starts sending HTTP connection requests. When the ACEdirector has been successful in establishing an HTTP connection to the cache, the ACEdirector brings the previously failed cache back into service.
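The failure and recovery sequence described above can be read as a small state machine. The Python sketch below is one interpretation, not the ACEdirector's implementation. The names `Phase` and `CacheMonitor` and the `ping` and `http_connect` callbacks are hypothetical. Two assumptions go beyond the text: a single failed connection request marks the cache as failed, and a cache whose HTTP retry fails keeps being retried with HTTP.

```python
from enum import Enum
from typing import Callable


class Phase(Enum):
    IN_SERVICE = "in service"
    FAILED_PINGING = "server failed, sending pings"
    FAILED_HTTP = "server failed, retrying HTTP"


class CacheMonitor:
    """Tracks one cache; tick() runs one health check per monitoring interval."""

    def __init__(self, ping: Callable[[], bool],
                 http_connect: Callable[[], bool]) -> None:
        self._ping = ping                  # True if a Layer 3 ping succeeds
        self._http_connect = http_connect  # True if an HTTP connection opens
        self.phase = Phase.IN_SERVICE

    @property
    def eligible(self) -> bool:
        """Only caches that are in service receive redirected requests."""
        return self.phase is Phase.IN_SERVICE

    def tick(self) -> Phase:
        if self.phase is Phase.IN_SERVICE:
            if not self._http_connect():
                self.phase = Phase.FAILED_PINGING   # enter "Server Failed"
        elif self.phase is Phase.FAILED_PINGING:
            if self._ping():
                self.phase = Phase.FAILED_HTTP      # first successful ping
        else:
            if self._http_connect():
                self.phase = Phase.IN_SERVICE       # back into service
        return self.phase
```

A redirector would keep one such monitor per cache and distribute requests only across the caches whose `eligible` property is true.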

5.3.2 Physical Connection Monitoring

ACEdirectors also monitor the physical link status of switch ports connected to caches. If the physical link to a cache goes down, the ACEdirector immediately places that cache in the "Server Failed" state, taking the same actions as if the cache had failed connection-based monitoring.

When the ACEdirector detects that a failed physical link to a cache has been restored, it brings the cache back into action in the same manner used to restore a cache that failed connection-based monitoring.

5.4 Cache Vendor Partnerships

Web cache redirection is only part of the overall caching system. Obviously, the cache itself is integral to the solution. Web cache redirection is useless unless it works smoothly with the cache.

Alteon Networks recognized this and has instituted partnerships with leading cache vendors including CacheFlow, Inktomi, Mirror Image and Network Appliance. The joint activities of these partnerships, such as interoperability testing, cross-training of technical staff and close ties between the sales and support staffs of Alteon and these partners, ensure that when Alteon's Web cache redirection capabilities are deployed in conjunction with cache products from these partners the result will be a total solution with smooth installation, easy configuration and maintenance and high performance.

6) Summary

Web caching provides valuable benefits to end users, enterprises and ISPs. A true win-win situation, caching can increase user satisfaction by improving response times while lowering WAN bandwidth requirements and costs for enterprises and ISPs.

A variety of caching options exist in the market today. Under most circumstances, transparent proxy caching, used in conjunction with Web cache redirection, offers significant advantages over other solutions. It requires no administration of caches or browsers, offers the highest level of performance by offloading network functions from the cache and supporting user access to multiple caches, and offers high availability with no single point of failure.

Alteon Networks' server switching products, the ACEdirector, ACEswitch 180 and ACEswitch 110, offer high-performance Web cache redirection. By coupling Alteon's Web cache redirection with caches from leading vendors such as CacheFlow, Inktomi, Mirror Image and Network Appliance, users can build complete Web caching solutions.